Hiring senior engineers is one of the most consequential decisions an engineering leader makes. A mis-hire at that level doesn’t just slow a team — it costs time, morale, product velocity, and ultimately market opportunity. Yet many companies still lead with coding tests that feel rigorous but provide little insight into the real capabilities senior engineers need to deliver in distributed teams.

In this article, you’ll learn:

This is not ideological — it’s operational.

The coding test emerged as an objective filter for companies facing large candidate volumes. That original use case, screening junior candidates at volume, is not the same as evaluating senior engineers.

Senior engineers are not hired to solve puzzles under time pressure. They’re hired to understand ambiguity, make architectural decisions, collaborate across boundaries, and ship quality outcomes over time. Coding tests reduce evaluation to speed and recall — a poor proxy for what matters in senior work.

That’s why senior candidates often disengage: not because they lack skill, but because the test measures the wrong thing.

If your hiring process screens out experienced engineers simply because they won’t or can’t perform in a low-context coding drill, you’re filtering for the wrong success criteria.

To understand why coding tests underperform, let’s unpack the real competencies that predict senior performance:

Senior engineers make trade-offs every day: reliability vs speed, decoupling vs maintainability, scale vs simplicity. These decisions are rarely binary and require context, not puzzles.

Each of these moments influences long-term cost and velocity.

Coding tests are not designed to assess trade-off reasoning at scale.

Senior hires are evaluated not on what they produce quickly, but on how they think:

These deeper signals rarely emerge in algorithmic drills.

In remote environments, communication is execution. Articulating decisions to stakeholders, aligning cross-functionally, mentoring juniors, and defusing disputes are part of the job.

Generic technical tasks don’t surface these capabilities — but observational evaluation does.

Senior engineers diagnose production incidents, refactor legacy systems, and make decisions under uncertainty. This “in-the-trench” judgment is not tested by isolated coding puzzles.

Most teams use coding tests because they’re easy to scale. That ease, however, is not the same as validity. Hiring teams often believe that more data means better decisions, but if the data isn’t the right signal, it becomes noise.

Senior engineers may refuse tests because:

When this happens, hiring teams either press on with low-signal data or unintentionally lose the very candidates they want most.

Neither option is tenable.

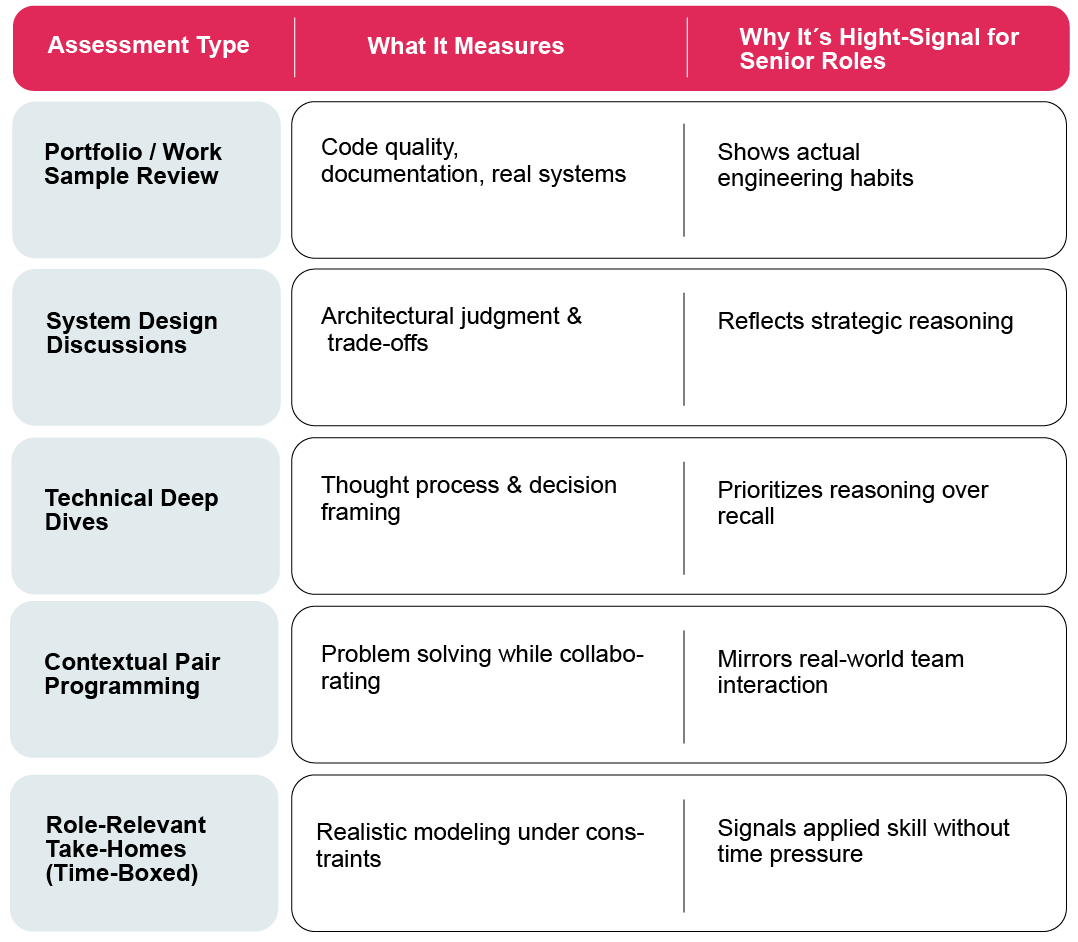

Replacing coding tests doesn’t mean losing rigor. It means redesigning evaluation around meaningful predictors of success:

These alternatives reveal how someone works, not just what they can remember. Applied with discipline and structure, they scale well and tend to generate much stronger predictive signal.

A common objection is that coding tests save time. But scalability without useful signals is operational waste — especially once hiring mistakes at the senior level cascade into project delays, team churn, and costly rework. The time you think you save early often becomes time you lose later when your new hire isn’t aligning with real engineering expectations.

Instead of thinking in terms of “fast vs. slow,” think in terms of predictive value per signal: how much insight does each stage give you into a candidate’s ability to contribute on day one and beyond?

A practical, layered evaluation framework looks like this:

This progression filters out weak fits early and aligns candidates with the actual demands of the role. It also preserves velocity in the funnel by replacing low-signal automation with high-quality, human-anchored conversations that reveal performance predictors coding tests alone can’t show.

Moving away from algorithm drills isn’t about lowering the bar; it’s about raising the relevance of your signals. When the evaluation reflects the way senior engineers actually work, your hiring signals are far more likely to predict on-the-job performance.

The most expensive hiring mistake isn’t the wrong hire.

It’s the decision made using signals that feel rigorous but don’t reflect real impact.

Senior hiring is not a checklist; it’s a risk-managed process. Senior engineers want to be evaluated in ways that respect their experience and match the challenges they’ll face from day one. Your process should do the same.

Real hiring signal isn’t about how fast they solve a puzzle — it’s about how they lead and deliver when it matters most.